This is a guest blog from Tom McBride, Director of Evidence at the Early Intervention Foundation. The Early Intervention Foundation is a member of the What Works Network.

The world needs more high-quality evaluation. This might seem like an unsurprising position for the Director of Evidence at a What Works Centre to take, but here at EIF we believe the case is clear and pressing.

Put simply, without good impact evaluation we don’t know if a service, programme or practice is achieving its intended aims and improving outcomes for those who are receiving it. Or to put it another way, we don’t know if it works.

Recently, I have been part of several discussions about whether austerity has increased the need for good-quality evidence on what works. When public finances are under pressure, the argument goes, it is critical that they are used effectively. I agree with the sentiment, but my view is that evidence is critical to the delivery of effective public services no matter how much money is available.

At EIF our mission is to ensure that effective early intervention is available and is used to improve the lives of children and young people at risk of poor outcomes. When the state steps in to support children, those children, their families and the taxpayer alike deserve to know that the services being provided are likely to work because they are underpinned by good evidence.

The argument that medicine should be evidence-based does not stand or fall on the level of funding available for testing or evaluation – why should social policy be any different?

Improving child outcomes with the help of strong evidence

Nevertheless, designing and delivering high-quality evaluations is hard – and arguably especially so for family and children’s services, which are usually delivered within complex environments.

Here at EIF we want to be part of the solution, not part of the problem, and so we are developing a range of content and resources to support those who wish to build their understanding of the effectiveness of services.

Over the last few years we have been developing our approach to assessing the evidence underpinning programmes which aim to improve outcomes for children. We rate the impact of these programmes against our standards of evidence, in order to include them in our online EIF Guidebook for commissioners.

Evaluations can be undermined by some surprisingly basic issues

EIF has now assessed the evidence base of more than 100 programmes, which puts us in a position to identify a number of issues that occur frequently in evaluations and evaluation reports, and that reduce our confidence in the results.

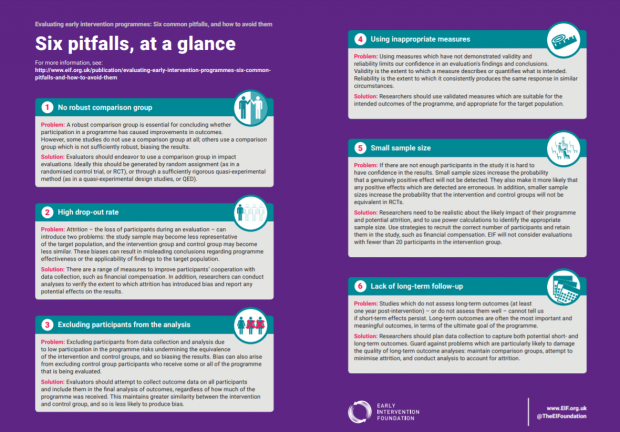

We have summarised these issues in Six common pitfalls and how to avoid them, our first output specifically designed to support programme developers and evaluators to deliver high-quality impact evaluations.

Some of the issues we highlight may seem quite basic – sample sizes that are too small, or a participant drop-out rate that is too high. But they are the ones we see frequently, and they have the biggest detrimental impact on our assessment of a programme’s strength of evidence.

And avoiding these mistakes shouldn’t just be about achieving a higher rating on the EIF Guidebook: the more important point here is that these pitfalls undermine the confidence anyone can have in the findings of an evaluation. Avoiding these missteps makes it more likely that a programme can legitimately claim to work.

Supporting more evaluation, and better evaluation

Six pitfalls is the first step in an ongoing effort to support an increase in the quantity and quality of evaluation in the UK. This first resource is aimed at programmes which are well developed and a reasonable way along their individual evaluation journey. But we recognise that not all programmes and charities are in a position to conduct the sort of impact evaluation which we use for inclusion on the EIF Guidebook.

That is why my team will be developing further content for providers who are at an earlier stage of that journey, who need advice and support on areas such as developing a theory of change and logic model for their programme. We also recognise that the evaluation of services on a local level poses a number of specific challenges, and we will be highlighting these and offering advice on how to tackle them through our work.

Evaluation is hard, but good-quality evidence is a crucial element in improving outcomes for children. Evaluation of what you are doing today provides vital information for improving decision-making and shaping more effective services in the future. In an area like early intervention, where the UK evidence base is still small and the sector is rapidly maturing, supporting more good-quality evaluation is a vital task for a What Works Centre to take on.

Tom McBride is Director of Evidence at the Early Intervention Foundation (EIF). Tom previously worked as a senior analyst in the Department for Education and the National Audit Office. He tweets at @Tom_EIF.
