This month has seen the end of the consultation period for the Ministry of Housing, Communities and Local Government’s (MHCLG) Integrated Communities Strategy green paper. As usual, the green paper offers citizens a chance to share their views on the Government’s vision, but what its readers might not notice is that some of the evidence behind it differs notably from that used in previous consultations.

In March 2018, MHCLG published the results of a Randomised Controlled Trial (RCT) of the Government-funded Community Based English Language (CBEL) Programme. This was the first RCT ever undertaken by the Department, and the first time that a project like CBEL had been evaluated using a randomised controlled trial, the gold-standard evaluation method. Results from the study fed directly into the green paper.

In case it isn’t already clear, this is a significant event: it is the first time that evidence from an empirical study run by MHCLG (previously the Department for Communities and Local Government, DCLG) has shaped its strategy.

Timely evidence

The evidence is timely. Studies of effective approaches to community integration are few and far between, and this prompted us to test the long-held assumption that learning English leads to better integration.

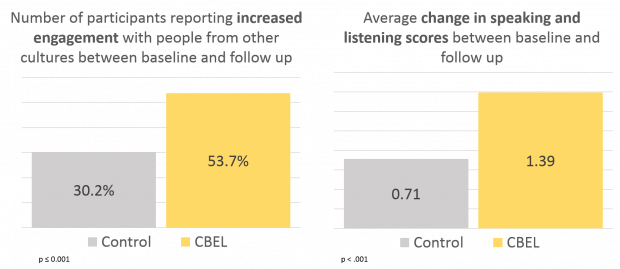

This study, a wait-list Randomised Controlled Trial, provided compelling evidence that the Community Based English Language course really does work: participants showed greater improvements in English test scores and on integration measures than the control group did.

Many would consider a project like CBEL too challenging to evaluate with an RCT, but we considered it exactly the right type of project for this kind of robust inquiry. Its results may not seem earth-shattering (after all, CBEL did what it was designed to do), but they are an important step forward in efforts to improve and add to the evidence on ‘what works’ to strengthen integration. Reliable evidence is particularly important in an area such as this, where government intervention could have an adverse effect on integration.

How did we do it?

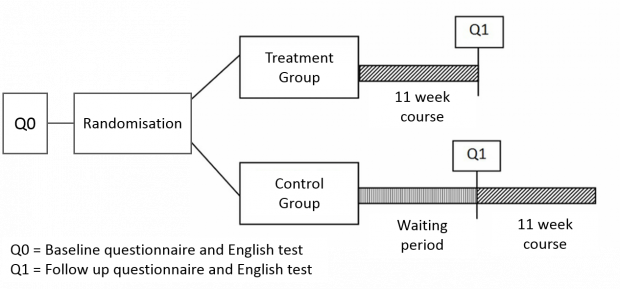

CBEL is an 11-week English language programme aimed mainly at women from South Asian backgrounds who do not speak English. To evaluate its effectiveness, we randomly divided participants into a treatment group (who would take the course straight away) and a control group (who would not receive the training until a few months later), so that we could then compare the progress each group had made.

We chose a wait-list design, which delays the start of the control group’s classes rather than denying them the training. This meant that everyone in those communities who was eligible for the course could receive it. The RCT was rolled out in five areas: Manchester, Rochdale, Oldham, Kirklees and Bradford. The treatment group started in May 2016 and the control group the following September.

Using a wait-list design meant that nobody “missed out” on the intervention.

The first task for MHCLG social researchers was to work with the course providers to ensure that enough eligible people could be recruited and assigned to both the control and treatment groups for the evaluation to produce robust results. This also meant allowing for participants who might drop out before or during the course (known as attrition).

We also had to prevent contamination, where members of the control group are exposed directly or indirectly to the intervention. This might happen if somebody in the May class told somebody in the September class what they had learned in their lessons, which would skew the results. Making this work in a community setting meant planning for the likelihood that two or more friends would want to attend the course together. We opted to randomise friends as a unit, so that friends were always assigned together to either the control or the treatment group, rather than being split across the May and September classes where one could pass on what they had learned to the other.
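The post does not say exactly how the allocation was carried out. As a minimal sketch of one common approach, cluster randomisation, the code below shuffles whole friend groups rather than individuals, assuming each participant record carries a hypothetical `group_id` shared by friends who asked to attend together (people attending alone get a `group_id` of their own). The function name and data layout are illustrative only, not the trial’s actual procedure.

```python
import random
from collections import defaultdict


def assign_friend_groups(participants, seed=None):
    """Randomise whole friend groups (clusters) to treatment or control.

    participants: iterable of (participant_id, group_id) pairs. group_id is a
    hypothetical label shared by friends who asked to attend together; people
    attending alone get a group_id of their own.
    Returns a dict mapping participant_id -> "treatment" or "control".
    """
    rng = random.Random(seed)  # seeded so the allocation can be reproduced and audited

    # Collect the members of each friend group.
    members = defaultdict(list)
    for pid, gid in participants:
        members[gid].append(pid)

    # Shuffle the groups, not the individuals, then give the first half of the
    # groups to treatment and the rest (one extra if the count is odd) to control.
    group_ids = sorted(members)
    rng.shuffle(group_ids)
    n_treatment_groups = len(group_ids) // 2

    allocation = {}
    for position, gid in enumerate(group_ids):
        arm = "treatment" if position < n_treatment_groups else "control"
        for pid in members[gid]:
            allocation[pid] = arm  # every friend in the group shares one arm
    return allocation


# Hypothetical example: p1/p2 and p4/p5 are pairs of friends.
people = [("p1", "g1"), ("p2", "g1"), ("p3", "g2"),
          ("p4", "g3"), ("p5", "g3"), ("p6", "g4")]
print(assign_friend_groups(people, seed=2016))
```

Because friends always land in the same arm, the May and September classes cannot share a friendship pair, which is the contamination route described above. Randomising groups rather than people does mean the two arms can end up with different numbers of participants.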

Overall, 527 people took part in the trial: 249 in the treatment group and 278 in the control group, with about 60% of participants providing data at every stage of the study. Although this may look like a small number, it was sufficient to test and measure the indicators we had selected. These included participants’ ability to understand, speak, read and write English, and changes in their propensity to interact with people from other cultures.
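One rough way to see what “sufficient” means is a minimum detectable effect (MDE) calculation: the smallest true difference between groups, in standard-deviation units, that a study of a given size would reliably detect. The sketch below is illustrative only. It assumes a simple two-sided comparison of two independent groups using the normal approximation, 5% significance and 80% power, and it assumes the overall 60% response rate applied equally to both arms. It ignores baseline adjustment and the clustering introduced by keeping friends together, and it is not the trial’s own power calculation.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(n_treatment, n_control, alpha=0.05, power=0.8):
    """Smallest true difference in group means (in standard-deviation units)
    that a simple two-sided comparison of two independent groups would detect
    with the given power, using the normal approximation."""
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = z.inv_cdf(power)          # about 0.84 for 80% power
    return (z_alpha + z_power) * sqrt(1 / n_treatment + 1 / n_control)


# Illustrative assumption: about 60% of each arm (249 and 278 participants)
# provides complete data, i.e. roughly 149 treatment and 167 control.
n_t = round(0.6 * 249)
n_c = round(0.6 * 278)
print(f"MDE: {minimum_detectable_effect(n_t, n_c):.2f} SD")
```

Adjusting for baseline scores, as trial analyses commonly do, would typically lower this figure, while accounting for clustering would typically raise it.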

The results

The trial showed statistically significant results in favour of the English course. We compared how much the English skills and integration measures of both groups had changed over the duration of the treatment group’s course, and found that both sets of outcomes had improved significantly faster for those attending the classes.
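The post does not describe the statistical model used, and the full report is the authority on that. As a deliberately simplified illustration of “comparing how much each group had changed”, the sketch below computes each participant’s gain (follow-up minus baseline score) and compares mean gains between the arms with Welch’s t statistic. This is a swapped-in textbook technique, not the trial’s analysis, and the scores are made up.

```python
from math import sqrt
from statistics import mean, variance


def gain_scores(baseline, follow_up):
    """Per-participant change: follow-up score minus baseline score."""
    if len(baseline) != len(follow_up):
        raise ValueError("baseline and follow-up must be paired per participant")
    return [after - before for before, after in zip(baseline, follow_up)]


def compare_gains(treatment_gains, control_gains):
    """Difference in mean gain (treatment minus control), Welch's t statistic
    and Welch-Satterthwaite degrees of freedom."""
    n_t, n_c = len(treatment_gains), len(control_gains)
    se2_t = variance(treatment_gains) / n_t  # squared standard error, treatment
    se2_c = variance(control_gains) / n_c    # squared standard error, control
    diff = mean(treatment_gains) - mean(control_gains)
    t_stat = diff / sqrt(se2_t + se2_c)
    df = (se2_t + se2_c) ** 2 / (se2_t ** 2 / (n_t - 1) + se2_c ** 2 / (n_c - 1))
    return diff, t_stat, df


# Made-up scores for illustration only (not trial data).
treat = gain_scores([10, 12, 9, 11], [13, 16, 14, 17])
control = gain_scores([11, 10, 12, 9], [12, 12, 14, 12])
print(compare_gains(treat, control))
```

A two-sided p-value can then be read from a t distribution with `df` degrees of freedom, for example `2 * scipy.stats.t.sf(abs(t_stat), df)`. A real trial analysis would usually also adjust for baseline characteristics and handle attrition explicitly.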

The trial results gave all of us (policy makers, researchers, course providers and communities) evidence that the English language programme was working as intended, as well as specific insights into where it was working best.

Taking a step back, the trial also demonstrated that we could test the effectiveness of a social integration project in real-life community settings using very robust methods, while bringing participants on board throughout the process. It has encouraged us to consider these methods for our future research.

Lessons learned

Designing the trial meant dealing with several practical challenges, including a tight timetable, the need for new questionnaires suitable for participants with a limited grasp of English, and the complexity of running a trial under real-world conditions.

We also had to take into account more general practical matters for participants. Parents of young children had greater childcare responsibilities over the summer months, and the religious season of Ramadan also fell during that period, which affected people’s availability to attend their courses.

What made it possible to gather meaningful results despite these challenges was close collaboration between everyone involved in the project (course providers, evaluators, participants and government analysts). For that reason, it is something we will look to replicate in future trials.

The CBEL RCT was designed by MHCLG social researchers working closely with policy colleagues, the National Learning and Work Institute (contracted to undertake the research), the Behavioural Insights Team and the Cross-Government Trial Advice Panel.

You can find the full report of the trial on the GOV.UK website.

If you’re a British civil servant and are considering running a trial, you can get free advice from the Cross-Government Trial Advice Panel. Get in touch: trialadvicepanel@cabinetoffice.gov.uk.
